DLI Lab, Yonsei University

I'm an Integrated M.S./Ph.D. student at the Data & Language Intelligence Lab, Yonsei University, advised by Prof. Dongha Lee.

My primary research interests lie in structured data reasoning for language models (e.g., tabular and HTML data) and personalized aesthetic assessment (PAA), with an emphasis on fashion-related applications.

Education

- Yonsei University, Department of Artificial Intelligence
  M.S./Ph.D. Student, Mar. 2025 - present
- Yonsei University
  B.S. in Computer Science and Engineering, Mar. 2020 - Feb. 2025

Experience

- Sinchon University Alliance IT Startup Club, CEOS
  Web Front-end Part Leader, Aug. 2025 - Jan. 2026
- College of Engineering Student Council
  Vice President, Dec. 2022 - May 2023
- Department of Computer Science and Engineering Student Council
  President, Dec. 2021 - May 2023
- College of Life Science and Biotechnology Dance Club, SHADOWS
  President, Jan. 2021 - Jun. 2022

Honors & Awards

- Honors Award, 1st semester, 2021
- Honors Award, 2nd semester, 2020
- Highest Honors Award, 1st semester, 2020

Selected Publications

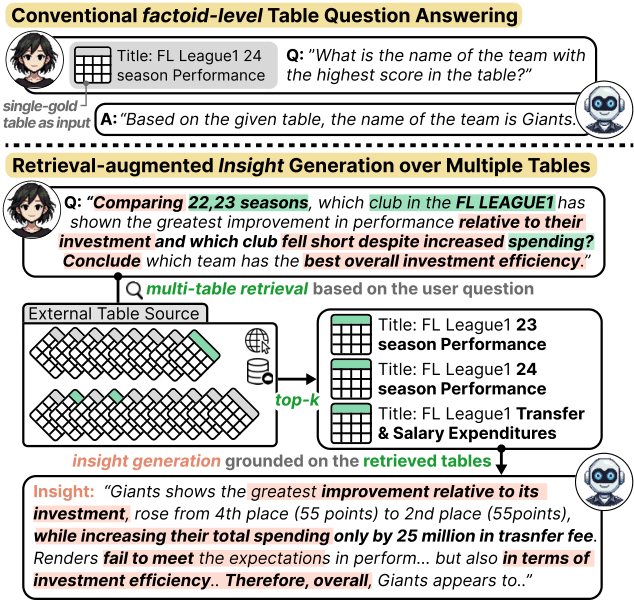

MT-RAIG: Novel Benchmark and Evaluation Framework for Retrieval-Augmented Insight Generation over Multiple Tables

Kwangwook Seo*, Donguk Kwon*, Dongha Lee# (* equal contribution, # corresponding author)

Annual Meeting of the Association for Computational Linguistics (ACL) 2025

Recent advancements in table-based reasoning have expanded beyond factoid-level QA to address insight-level tasks, where systems should synthesize implicit knowledge in the table to provide explainable analyses. Although effective, existing studies remain confined to scenarios where a single gold table is given alongside the user query, failing to address cases where users seek comprehensive insights from multiple unknown tables. To bridge these gaps, we propose MT-RAIG Bench, designed to evaluate systems on Retrieval-Augmented Insight Generation over Multiple Tables. Additionally, to tackle the suboptimality of existing automatic evaluation methods in the table domain, we further introduce a fine-grained evaluation framework, MT-RAIG Eval, which achieves better alignment with human quality judgments of the generated insights. We conduct extensive experiments and reveal that even frontier LLMs still struggle with complex multi-table reasoning, establishing MT-RAIG Bench as a challenging testbed for future research.
